Perennial reservations: weird behaviour when not configured correctly

When using RDM disks in your environment you might notice long (even extremely long) boot times on your ESXi hosts. During the start of an ESXi host, the storage mid-layer attempts to discover all devices presented to the host during the device claiming phase. However, Raw Device Mapped (RDM) LUNs used as MSCS cluster devices carry a persistent SCSI reservation, placed on the device by the active MSCS node hosted on another ESXi host. The booting host cannot interrogate such a LUN, and this lengthens the start process. To deal with this, ESXi uses a configuration flag to determine whether an RDM LUN is used for an MSCS cluster: each device participating in the cluster is marked as perennially reserved.

Configuring the device to be perennially reserved is local to each ESXi host, and must be performed on every ESXi host that has visibility to each device participating in an MSCS cluster. This improves the start time for all ESXi hosts that have visibility to the devices.

The process is described in this KB and requires issuing the following command on each ESXi host:

esxcli storage core device setconfig -d naa.id --perennially-reserved=true

You can check the status using the following command:

esxcli storage core device list -d naa.id

In the output of the esxcli command, search for the entry Is Perennially Reserved: true. This shows that the device is marked as perennially reserved.

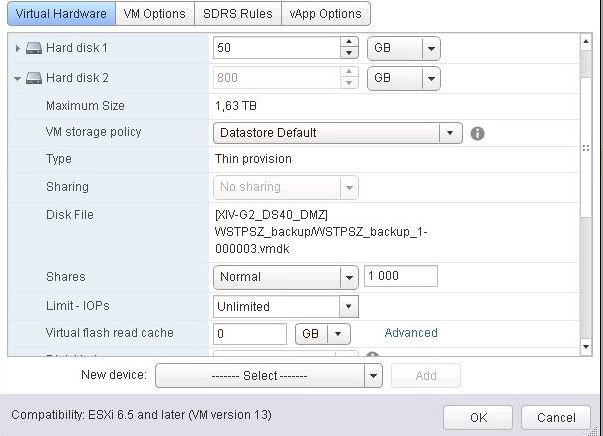
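To audit a whole host rather than one device at a time, the same field can be filtered out of the full device list. The following is only a minimal sketch: `list_perennially_reserved` is a hypothetical helper name, and it assumes the usual esxcli layout in which each device block starts with the device ID at the beginning of a line, followed by space-indented `Key: value` lines.

```shell
#!/bin/sh
# Print the ID of every device that reports "Is Perennially Reserved: true".
# Reads `esxcli storage core device list` output on stdin.
# Assumption: a device block starts with the device ID at column 0 and its
# properties follow on lines indented with spaces.
list_perennially_reserved() {
    awk '
        /^[^ ]/ { dev = $1 }                              # unindented line: start of a new device block
        /^ +Is Perennially Reserved: true/ { print dev }  # flag is set for the current device
    '
}

# On an ESXi host this would be used as:
#   esxcli storage core device list | list_perennially_reserved
```

Comparing the resulting list with the LUNs that are really used as MSCS RDMs shows quickly whether anything else has been flagged.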

However, I recently came across a problem with snapshot consolidation; even Storage vMotion was not possible for one particular VM.

While checking the VM settings, I saw that one of the disks was locked and indicated that it was running on a delta disk, which means there is a snapshot. However, Snapshot Manager didn't show any snapshot at all. Moreover, creating a new snapshot and then deleting all snapshots, which in most cases solves the consolidation problem, didn't help either.

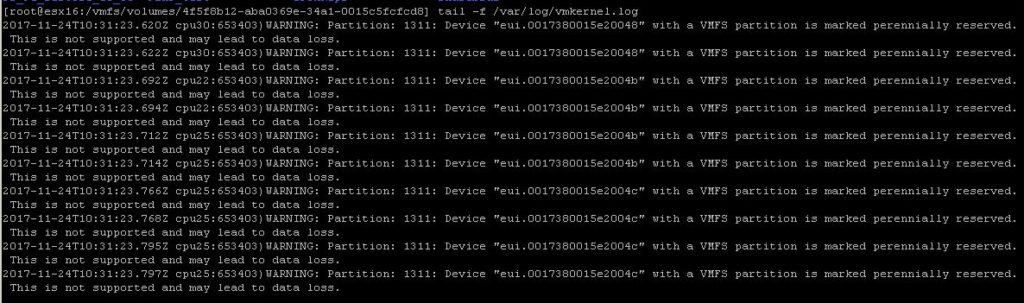

In vmkernel.log, lots of perennial reservation entries appeared while I was trying to consolidate the VM. I initially ignored them, because there were RDMs which were intentionally configured as perennially reserved to prevent long ESXi boot times.

However, after digging deeper and checking a few things, I returned to the perennial reservations and decided to check which LUN was generating these warnings, and why it produced them specifically during consolidation or Storage vMotion of a VM.

To my surprise, I realised that the datastore on which the VM's disks reside was configured as perennially reserved! It was due to a mistake: when the PowerCLI script was prepared, someone accidentally configured all available LUNs as perennially reserved. Changing the value to false happily solved the problem.
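The revert uses the same setconfig command with the flag set to false. One way to avoid repeating the mistake is to build the commands from an explicitly named list of devices instead of every visible LUN, and to review them before running anything. The sketch below illustrates that idea only; `print_setconfig_cmds` is a hypothetical helper, the device IDs are placeholders, and it prints the commands without executing them.

```shell
#!/bin/sh
# Dry run: print the esxcli commands that would set the perennially-reserved
# flag for an explicit list of device IDs. Nothing is executed.
# usage: print_setconfig_cmds true|false naa.id [naa.id ...]
print_setconfig_cmds() {
    if [ "$#" -lt 2 ]; then
        echo "usage: print_setconfig_cmds true|false naa.id [naa.id ...]" >&2
        return 1
    fi
    value=$1
    shift
    # Accept only the two values the flag takes in this article.
    case "$value" in
        true|false) ;;
        *) echo "value must be true or false" >&2; return 1 ;;
    esac
    for dev in "$@"; do
        echo "esxcli storage core device setconfig -d $dev --perennially-reserved=$value"
    done
}

# Example (placeholder ID): print the command that clears the flag on one device.
#   print_setconfig_cmds false naa.id
```

Once the printed commands have been checked against the list of real MSCS RDMs, they can be run on each ESXi host that sees those devices.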

The moral of the story is simple – logs are not issued to be ignored 🙂